The Ethics of Efficiency: How to Write a Literature Review Ethically Using AI Tools

The blank cursor is the academic’s oldest enemy. We’ve all been there—sitting in a dimly lit room with forty browser tabs open, three half-empty cups of coffee, and a mounting sense of dread about synthesizing 50 years of research into a cohesive narrative.

Then came Generative AI. Suddenly, the mountain looks like a molehill. But as the barrier to entry for writing falls, the bar for integrity rises. Learning how to write a literature review ethically using AI tools isn’t just about saving time; it’s about protecting your reputation and the sanctity of your research.

If you’re worried that using AI makes you a “cheater,” let’s clear the air: AI is a high-powered bicycle for the mind. It helps you get where you’re going faster, but you still have to do the pedaling, steering, and navigation.

The New Reality of Academic Synthesis

The traditional literature review process is, frankly, grueling. You find papers, read abstracts, skim results, take notes, and then try to find the “red thread” that connects them all. It’s a cognitive marathon.

AI tools like Consensus, Elicit, and even ChatGPT have fundamentally changed this workflow. They can summarize dense PDFs in seconds and find connections between disparate studies that might take a human weeks to spot.

However, the “black box” nature of these tools creates a massive ethical gray area. If an AI summarizes a paper and you copy-paste that summary, is it your work? If the AI hallucinates a citation (a common and dangerous occurrence), and you include it, who is responsible?

The answer is always you.

Why Ethics Matter More Than Ever in the Age of AI

We live in an era where “publish or perish” has met “prompt or perish.” The temptation to let a Large Language Model (LLM) do the heavy lifting is immense. But ethical writing isn’t just a moral hoop to jump through—it’s the foundation of scientific progress.

- The Risk of “Ghost Research”

When you rely too heavily on AI to interpret data, you risk losing the nuance. A literature review isn’t just a summary; it’s a critical evaluation. AI is great at the “what,” but it struggles with the “so what?” and the “why?”

- Intellectual Property and Ownership

Who owns the words generated by a machine? While legal frameworks are still catching up, academic institutions are clear: if you didn’t think it and you didn’t write it, you must disclose it.

- The Hallucination Trap

AI models are predictive, not factual. They are designed to provide the most likely next word in a sequence, not the most accurate one. In a literature review, a single “hallucinated” statistic can undermine the credibility of your entire thesis.

A Step-by-Step Framework for Ethical AI Integration

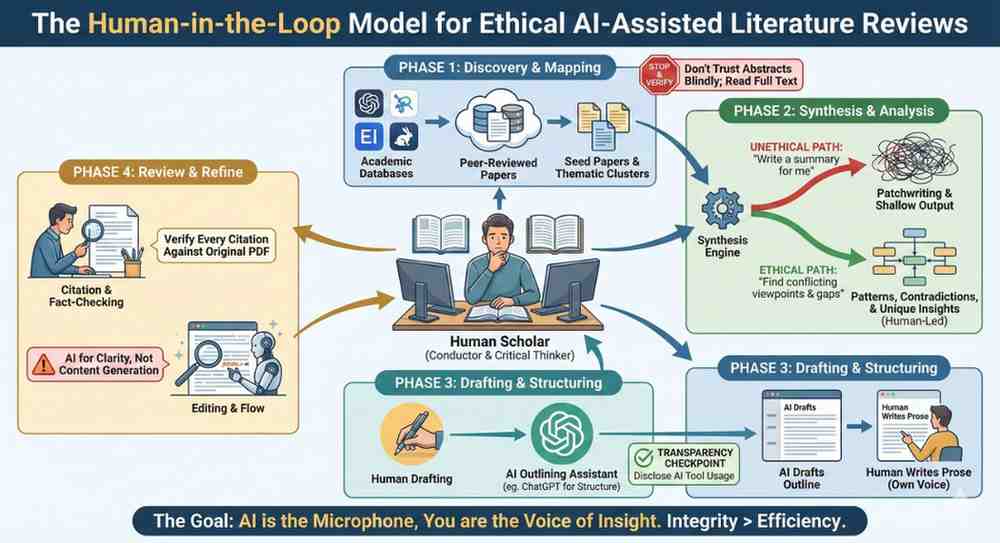

Writing a literature review ethically using AI tools requires a “Human-in-the-Loop” approach. You are the conductor; the AI is the orchestra.

Phase 1: Discovery and Mapping

Instead of using Google Scholar and getting 2 million results, use AI discovery tools like Elicit or ResearchRabbit.

The Ethical Way: Use these tools to find “seed papers.” Don’t just trust the AI’s summary of why a paper is relevant. Download the full text and verify the methodology.

The Shortcut to Avoid: Asking ChatGPT to “give me a list of the 10 most important papers on [Topic].” It may well invent some of them.
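One way to make the "verify before you trust" habit concrete is a small tracking script. The sketch below is only an illustration, not a feature of Elicit, ResearchRabbit, or ChatGPT: the `CandidateSource` class, its field names, and the placeholder titles are all assumptions made up for this example. It simply keeps AI-suggested papers out of your citable list until you have read the full text and checked the methodology yourself.

```python
from dataclasses import dataclass


@dataclass
class CandidateSource:
    """A paper suggested by an AI tool (hypothetical structure for illustration)."""
    title: str
    suggested_by: str                  # which tool surfaced the paper
    full_text_read: bool = False       # set to True only after opening the real paper
    methodology_checked: bool = False  # set to True only after reviewing the methods


def is_verified(source):
    """A source counts as verified only when both manual checks are done."""
    return source.full_text_read and source.methodology_checked


def citable(sources):
    """Return the sources that are safe to cite."""
    return [s for s in sources if is_verified(s)]


def needs_follow_up(sources):
    """Return the sources that still need a human check."""
    return [s for s in sources if not is_verified(s)]


# Placeholder entries; replace with the papers your tools actually suggest.
candidates = [
    CandidateSource("Placeholder seed paper A", "Elicit", True, True),
    CandidateSource("Placeholder seed paper B", "ResearchRabbit", True, False),
    CandidateSource("Placeholder paper C", "ChatGPT"),
]

for source in needs_follow_up(candidates):
    print(f"Do not cite yet: {source.title} (suggested by {source.suggested_by})")
```

The script cannot tell you whether a paper exists or says what the AI claims. It only records whether you have done that check, so the judgment stays with you.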

Phase 2: Synthesis, Not Just Summarization

This is where most researchers stumble. There is a world of difference between “AI-summarized” and “AI-assisted synthesis.”

Try this: Feed the AI three different abstracts and ask: “What are the conflicting viewpoints regarding the methodology used in these three studies?”

The Goal: Use AI to find patterns, gaps, and contradictions. Then, write those findings in your own voice.

Phase 3: Drafting with a “Clean Wall”

The most ethical way to draft is to use AI for outlining, not prose.

- Use AI to help structure your H2s and H3s.

- Close the AI tool.

- Write the actual paragraphs yourself using your notes.

- Open the AI tool again to help with transitions or to find a more precise word.

Navigating the "Gray Areas": Common Mistakes

Even well-intentioned researchers fall into these traps. Awareness is your best defense.

Relying on “AI Detectors”

Let’s be honest: AI detectors are notoriously unreliable. They often flag non-native English speakers or very formal academic prose as “AI-generated.” Don’t rely on them to prove your innocence, and don’t assume a “0% AI” score means your work is ethical. Integrity is about the process, not the output.

The “Salami Slicing” of Summaries

Some writers take an AI summary, change three words, and call it their own. This is essentially “patchwriting,” a form of plagiarism. If the structure of the thought belongs to the AI, you are on thin ice.

Ignoring Bias

AI models are trained on existing literature, which often contains Western, male-centric, or publication-biased data. If you use AI to “summarize the field,” you might accidentally be perpetuating those same biases without realizing it.

Practical Tips for Staying on the Right Side of the Line

If you want to use AI responsibly, you need a personal code of conduct. Here is how I recommend approaching it:

- Keep a “Prompt Log”: Record which tools you used and what you asked them. This transparency is invaluable if your methodology is ever questioned.

- The 80/20 Rule: 80% of the intellectual heavy lifting (critical analysis, identifying gaps, forming the thesis) must come from you. The AI can handle the 20% that is administrative or organizational.

- Verify Every Citation: Never, under any circumstances, include a citation provided by an LLM without looking at the original PDF first.

- Disclose, Disclose, Disclose: Check your journal’s or university’s policy. Most now require a statement like: “The author used [Tool Name] for the purpose of [e.g., grammar checking/outlining/synthesizing initial summaries].”
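If you prefer to keep your prompt log in a script rather than a notebook, a minimal sketch might look like the following. Everything here is an assumption for illustration: the `log_prompt` and `disclosure_statement` helpers, the entry fields, and the sample dates and prompts are hypothetical. The generated sentence follows the disclosure template quoted above, but your journal or university may require different wording.

```python
import json
from datetime import date


def log_prompt(log, tool, purpose, prompt, when=None):
    """Append one AI interaction to the log and return the new entry."""
    entry = {
        "date": (when or date.today()).isoformat(),
        "tool": tool,
        "purpose": purpose,
        "prompt": prompt,
    }
    log.append(entry)
    return entry


def disclosure_statement(log):
    """Draft a disclosure sentence from the tools and purposes in the log."""
    if not log:
        return "The author did not use AI tools."
    uses = {}  # tool name -> list of distinct purposes, in first-seen order
    for entry in log:
        purposes = uses.setdefault(entry["tool"], [])
        if entry["purpose"] not in purposes:
            purposes.append(entry["purpose"])
    parts = [
        f"{tool} for the purpose of {', '.join(purposes)}"
        for tool, purposes in uses.items()
    ]
    return "The author used " + "; ".join(parts) + "."


# Sample entries with made-up dates and prompts.
prompt_log = []
log_prompt(prompt_log, "Elicit", "finding seed papers",
           "Papers on [Topic] since 2015", date(2026, 1, 5))
log_prompt(prompt_log, "ChatGPT", "outlining",
           "Suggest section headings for a review of [Topic]", date(2026, 1, 6))
log_prompt(prompt_log, "ChatGPT", "grammar checking",
           "Tighten this paragraph: ...", date(2026, 1, 7))

print(json.dumps(prompt_log, indent=2))
print(disclosure_statement(prompt_log))
```

Treat the generated sentence as a first draft. Edit it to match the exact disclosure wording your venue asks for.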

Table: Ethical vs. Unethical AI Usage in Literature Reviews

| Task | Ethical Use | Unethical Use |
| --- | --- | --- |
| Search | Finding related papers through citation mapping tools. | Asking AI to “invent” sources to fit your narrative. |
| Summarization | Using AI to get the “gist” before reading the full paper. | Copy-pasting AI summaries directly into your draft. |
| Brainstorming | Asking AI for potential themes or “gaps” in the literature. | Letting AI decide the entire scope and direction of your review. |
| Editing | Using AI to improve clarity, flow, and grammar. | Asking AI to “Write a 500-word section on X in the style of a researcher.” |

The Myth of the "Easy Way Out"

There’s a pervasive myth that AI makes writing a literature review “easy.” In reality, doing it right with AI is almost as much work as doing it without. Why? Because you now have an extra layer of verification.

You aren’t just reading papers; you’re auditing the AI’s interpretation of those papers. If you find yourself spending less than an hour on your literature review, you aren’t using AI—you’re letting AI use you.

Is it worth it? Absolutely. AI can help you see the “big picture” of a field in ways our human brains struggle to do. It can help you organize thousands of data points into a coherent structure. But the “soul” of the review—the insight that moves the field forward—can only come from you.

Frequently Asked Questions (FAQs)

Can I use ChatGPT to write my literature review?

You can use it for brainstorming, outlining, and refining your language, but you should not use it to generate the actual content of the review. It lacks the critical thinking skills required for academic synthesis and is prone to hallucinating facts.

Is using AI for a literature review considered plagiarism?

It depends on how you use it. If you represent AI-generated text as your own original thought and writing, most institutions consider it a form of academic dishonesty or “AI-giarism.” If you use it as a research assistant and disclose its use, it is generally accepted.

How do I cite AI-generated content?

Most citation styles (APA, MLA, Chicago) now have specific guidelines for citing AI, and the details differ. APA credits the company (e.g., OpenAI) as the author, Chicago treats the tool itself as the author, and MLA advises against listing the tool as an author at all, so check the current guidance for your style. However, in a literature review, you should be citing the original research papers, not the AI that summarized them.

What are the best AI tools for literature reviews in 2026?

Tools like Consensus (which searches only peer-reviewed research), Elicit (the AI research assistant), and Scite.ai (which shows whether later studies have supported or contrasted a paper’s findings) are currently the gold standard for ethical research.

Final Thoughts: The Future of the Human Scholar

Learning how to write a literature review ethically using AI tools is a career-long skill. As these tools become more sophisticated, the temptation to let them take the wheel will grow.

But remember: a literature review is a conversation between you and the researchers who came before you. AI is just the microphone. Don’t let the microphone do the talking.

Your unique perspective, your ability to spot a subtle flaw in a 2012 methodology, and your passion for your subject are things no algorithm can replicate. Use the tools to clear the busywork so you can focus on the brilliance.

If you want to understand how AI tools can help you write a literature review, you will love these courses designed for researchers like you: https://thephdcoaches.com/courses/.

Final Thoughts on Mastering the Review

At the end of the day, learning how to write a literature review for a research paper is about developing a specific type of vision. You’re learning to see the connections between disparate ideas. You’re learning to see the “shape” of human knowledge and, more importantly, where the edges of that knowledge are fraying.

Don’t aim for perfection in your first draft. Write the “messy” version where you just try to get the ideas down. The clarity comes in the editing. By the time you finish, you shouldn’t just be an expert on what has been done; you should be the most qualified person to explain what needs to happen next.

Writing a literature review is a marathon, not a sprint. Take it one theme at a time, stay organized, and remember: you aren’t just reporting on the conversation; you’re joining it.

About Dr. Tripti Chopra

Dr. Chopra is the founder and editor of thephdcoaches.blogs and Thephdcoaches.
